Reliable messaging talk by Udi Dahan

Consider the blog post "Life without distributed transactions" or the talk "Reliable Messaging Without Distributed Transactions" by Udi Dahan.

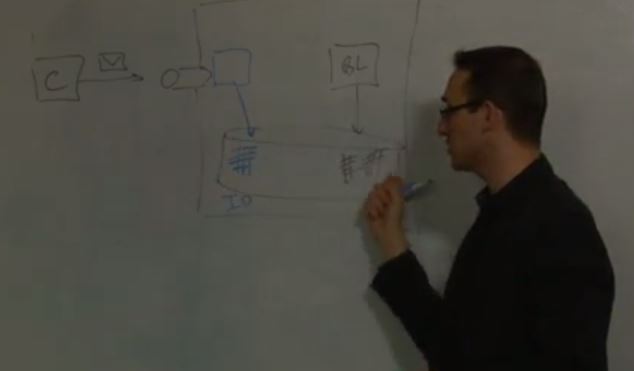

The talk discusses the problems that arise because an update to a database and a send on the persistent messaging system can't be in the same transaction.

He describes a solution for which he says "there are lots of moving parts". It's actually a complex, inefficient solution to a non-problem!

He proposes two extra tables in the database: a table of message ids to detect duplicates, and a table of messages for the purpose of replaying the sending of outgoing messages. If a message handler finds that the incoming message is a duplicate, it must replay the sending of all the outgoing messages, and it must give each of them the same message id it had originally, so that subscribers to the outgoing messages, which run the same de-duplication logic, work properly. If the incoming message is not a duplicate, the handler must update the table of message ids and the table of messages that may need resending in addition to the master data, and all these updates must be in a single atomic database transaction. Outgoing messages are only sent after this transaction commits. Finally, the incoming message can be acknowledged.

He doesn't mention the need to clean up the table of outgoing messages kept for replay, nor the fact that this can't be done until it is known that the acknowledgement of the incoming message has been received and the removal of the message from the messaging system has committed.
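The condition on cleanup can be made concrete with a small sketch, again assuming SQLite and the same hypothetical `outbox` table. The `ack_committed` flag is an assumption: it stands for knowledge that the broker has durably removed the incoming message, and the sketch deliberately does not say how a handler would obtain it.

```python
import sqlite3

OUTBOX_SCHEMA = (
    "CREATE TABLE IF NOT EXISTS outbox (in_msg_id TEXT, out_msg_id TEXT, body TEXT)"
)


def cleanup_outbox(conn, in_msg_id, ack_committed):
    """Delete the replay rows for one incoming message.

    `ack_committed` is a hypothetical input meaning the broker has durably
    removed the incoming message. Before that, a redelivery is still possible
    and the rows are needed to resend the outgoing messages.
    """
    if not ack_committed:
        return False  # too early: keep the rows for a possible replay
    with conn:
        conn.execute("DELETE FROM outbox WHERE in_msg_id = ?", (in_msg_id,))
    return True
```

Deleting the rows any earlier risks a redelivered message being recognised as a duplicate while its outgoing messages can no longer be replayed.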

Distributed transactions are of course an anti-pattern and should never be used. Most of what he says is spot on, but he's solving a problem created by the anti-patterns involved in using a persistent, unordered, at-least-once message bus in the first place.

Towards the end of the talk he says (paraphrasing slightly):

The only way to get to reliability is to analyse the code line by line and ask what happens if the server crashes here. But how do you test it to know it's bulletproof? It's very difficult, so we have delayed it until NServiceBus 5 (rather than 4). We need an automated test that deterministically stops at every single line, kills the process, starts the process up again, and asserts that the state is correct. If you thought unit testing was difficult then this is the next level of difficult. You want to deal with any scenario: if you crash at any line of code, the system will behave correctly. That's ultimately what makes the DTC powerful and useful, because it solves all those problems. But most people don't like the DTC because they don't realise what the alternative is: actually trying to build it themselves. The assumption is just use a message id table to detect duplicates and you're done. It's not. If you build on top of RabbitMQ then you need this.
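To give a feel for the kind of test being described, here is a toy crash-injection harness. It is an illustrative sketch only: an in-memory dict stands in for the database, a raised `Crash` exception stands in for killing the process, and crashes are injected at a few hypothetical `checkpoint()` calls rather than at literally every line. After each crash the unacknowledged message is redelivered, and the harness asserts that the master data changed exactly once and that every outgoing message was sent under its original id.

```python
class Crash(Exception):
    """Stands in for the process being killed at an injected point."""


def deliver(store, sent, msg_id, crash_at=None):
    """One delivery attempt of msg_id. Raises Crash at checkpoint number
    crash_at; returns True when the handler reaches the acknowledge."""
    reached = 0

    def checkpoint():
        nonlocal reached
        reached += 1
        if reached == crash_at:
            raise Crash(reached)

    checkpoint()
    if msg_id not in store["processed"]:
        # Prepare the local transaction without touching durable state.
        new_state = {
            "processed": store["processed"] | {msg_id},
            "balance": store["balance"] + 10,
            "outbox": {**store["outbox"], msg_id: [msg_id + "-out"]},
        }
        checkpoint()  # crash here: the transaction never commits
        store.update(new_state)  # the commit: all or nothing in this model
    checkpoint()
    for out_id in store["outbox"][msg_id]:
        sent.append(out_id)  # handed to the (simulated) broker
        checkpoint()
    return True  # acknowledge the incoming message


def crash_test():
    """Crash at each checkpoint in turn, redeliver, and check the state.
    Returns the number of crash points exercised."""
    crash_at = 1
    while True:
        store = {"processed": set(), "balance": 0, "outbox": {}}
        sent = []
        try:
            deliver(store, sent, "m1", crash_at)
            return crash_at - 1  # no crash happened: every point was covered
        except Crash:
            # The message was never acknowledged, so the broker redelivers it.
            deliver(store, sent, "m1")
        assert store["balance"] == 10, crash_at  # applied exactly once
        assert set(sent) == {"m1-out"}, crash_at  # resent under the same id
        crash_at += 1
```

Even this four-step toy needs a separate scenario per crash point; a real handler, with real I/O and a real process kill, multiplies that effort.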

He admitted the solution is complex, difficult to test and taking a long time to release. It's sad he doesn't realise it's a non-problem. It's sad that he entertains solutions where (as he says) almost every line of code has to be tested as a potential point of failure.

The CEDA replication system synchronises databases for any schema without distributed transactions and without persisting messages. If it used the approach described by Udi Dahan it would be hideously complicated and inefficient.

See messaging done right for a much better approach.

By the way, he makes a comment about ZeroMQ [ ] which doesn't seem fair:

ZeroMQ supports best effort, and if you want a high level of reliability ZeroMQ won't work for you. You can't compensate for lack of durability without reimplementing that yourself, and that's a waste of time.

He's actually talking about ZeroMQ publish-subscribe, which is only one of the ZeroMQ messaging patterns and not the appropriate one when "you want a high level of reliability", yet he appears to dismiss ZeroMQ without qualification.